OpenAI and ChatGPT have been dominating the LLM space for more than a year now, at least in terms of media and public attention.

While ChatGPT based on GPT-3.5 was already impressive, GPT-4 is a major step forward, with several notable capabilities that go well beyond its predecessor. OpenAI has not published GPT-4's parameter count. The often-quoted figure of 100 trillion parameters is an unconfirmed rumor; if it were true, GPT-4 would be more than 500 times larger than GPT-3 with its 175 billion parameters.

Released in March 2023, GPT-4 shows a better grasp of context, which leads to more relevant and coherent responses. It stays coherent over longer passages, making it suitable for generating lengthy reports, stories, or detailed explanations. Later versions of GPT-4 were trained on more recent data, so their responses are more current. GPT-4 also handles complex reasoning, problem solving, and analytical tasks better, and it is less likely to produce incorrect or nonsensical responses, particularly in nuanced or complex subject areas.

In ChatGPT, GPT-4 is currently available only with a paid subscription; ChatGPT Plus costs $20 per month.

With custom GPTs, we can now add our own knowledge to the model and make the result available for private or public use.
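Uploading a file to a GPT does not retrain the model. Instead, the file's content is made available to the model as context when a question is asked, typically by retrieving the most relevant passages. This approach is usually called retrieval-augmented generation (RAG). OpenAI's actual retrieval pipeline is not reproduced here. The following is only a minimal sketch of the idea, assuming the PDF has already been extracted to plain text and using simple word overlap instead of embedding-based search. `chunk_text`, `tokenize`, `score` and `top_chunks` are hypothetical helper names.

```python
import re
from collections import Counter


def chunk_text(text: str, max_words: int = 50) -> list[str]:
    """Split a document into consecutive chunks of at most `max_words` words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and keep only alphanumeric tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def score(question: str, chunk: str) -> int:
    """Count question tokens (with multiplicity) that also occur in the chunk."""
    q = Counter(tokenize(question))
    c = Counter(tokenize(chunk))
    return sum(min(n, c[tok]) for tok, n in q.items())


def top_chunks(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks with the highest overlap score, best first.

    sorted() is stable, so chunks with equal scores keep their document order.
    """
    return sorted(chunks, key=lambda ch: score(question, ch), reverse=True)[:k]


# Invented placeholder text, not taken from EB 105.
document = "Section alpha covers lighting. Section beta covers markings. Section gamma covers parking."
chunks = chunk_text(document, max_words=4)
print(top_chunks("Which section covers markings?", chunks, k=1))  # → ['Section beta covers markings.']
```

A production system would more likely rank overlapping chunks by embedding similarity, but the principle is the same: the model only sees the selected excerpts, which may help explain why answers paraphrase the source or miss exact details.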

Let’s try a hands-on example: building a Vertiport Assistant that answers questions about eVTOLs and vertiports. Out of the box, ChatGPT already has general knowledge of the topic.

Now let’s ask about something OpenAI has apparently not scraped: FAA EB 105, the Engineering Brief that describes vertiport design. The document was released in March 2023, just within GPT-4's knowledge cutoff of April 2023, and is publicly accessible on the FAA website.

ChatGPT does not know the document; it only recognizes what the abbreviations FAA and EB stand for.

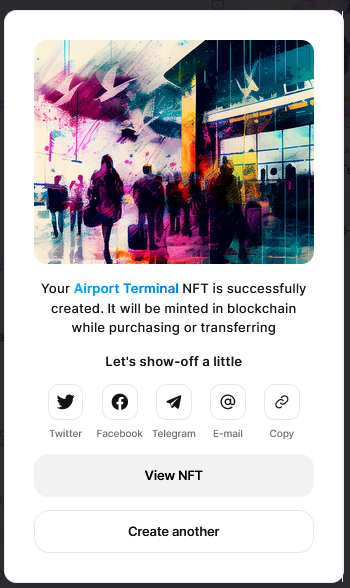

Let’s create a GPT and upload the PDF file as its knowledge.

Note that I also added the equivalent EASA document, the Prototype Technical Specifications (PTS) for vertiports (March 2022), and disabled web browsing to make sure the answers rely on the uploaded knowledge only.
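Disabling web browsing removes one outside source, but the model can still fall back on what it learned during training. A GPT's instructions can additionally ask it to stick to the uploaded files. The sketch below shows what such a grounding instruction might look like when a prompt is assembled by hand from retrieved excerpts. `build_grounded_prompt` and its wording are illustrative assumptions, not the configuration used for the Vertiport Assistant.

```python
def build_grounded_prompt(question: str, excerpts: list[str]) -> str:
    """Assemble a prompt that asks the model to answer only from the given excerpts.

    Hypothetical helper: the instruction wording is an example, not an OpenAI default.
    """
    # Number the excerpts so the answer can cite them, e.g. [1], [2].
    numbered = "\n".join(f"[{i}] {text}" for i, text in enumerate(excerpts, start=1))
    return (
        "Answer the question using only the excerpts below. "
        "Quote numeric values exactly as written and cite the excerpt number. "
        "If the excerpts do not contain the answer, say that you do not know.\n\n"
        f"Excerpts:\n{numbered}\n\n"
        f"Question: {question}"
    )


# Invented placeholder excerpts, not taken from EB 105 or the EASA PTS.
print(build_grounded_prompt(
    "Which section covers markings?",
    ["Section alpha covers lighting.", "Section beta covers markings."],
))
```

Instructions like these tend to reduce, but not eliminate, answers that drift away from the source.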

Now we receive an answer that is more or less a rephrased version of the document.

Asking for more specific information, however, produces answers that deviate from the source documents.

We are off by 5 mm?!
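A deviation like this suggests double-checking every value the GPT returns against the source document. Below is a minimal sketch of such a check, assuming the PDF has already been converted to plain text. It extracts lengths that carry a unit, normalizes them to millimetres, and reports values in the answer that match no value in the source. The helper names, the unit list and the 0.5 mm tolerance are illustrative assumptions, and the example strings are invented, not taken from EB 105.

```python
import re

# Conversion factors to millimetres for the units this sketch recognises.
TO_MM = {"mm": 1.0, "cm": 10.0, "m": 1000.0, "in": 25.4, "ft": 304.8}

# A number (optionally with decimals) followed by one of the units above.
# Crude heuristic: "10 in the" would also be read as 10 inches.
LENGTH = re.compile(r"(\d+(?:\.\d+)?)\s*(mm|cm|m|in|ft)\b")


def lengths_mm(text: str) -> list[float]:
    """Return every length found in `text`, converted to millimetres."""
    return [float(value) * TO_MM[unit] for value, unit in LENGTH.findall(text)]


def unmatched(answer: str, source: str, tol_mm: float = 0.5) -> list[float]:
    """Return lengths from `answer` (in mm) that match no length in `source` within `tol_mm`."""
    reference = lengths_mm(source)
    return [x for x in lengths_mm(answer) if all(abs(x - r) > tol_mm for r in reference)]


# Invented example text: the answer states 0.305 m where the source says 300 mm.
source = "The marking is 300 mm wide and the area is 12 ft long."
answer = "The marking is 0.305 m wide and the area is 12 ft long."
print(unmatched(answer, source))  # flags a single value of about 305 mm
```

The check deliberately ignores numbers without a unit and cannot tell which value belongs to which element, so it only points at candidates for a manual comparison with the document.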

Conclusion: With GPT-4, we can easily integrate ChatGPT into other solutions, use its multimodal features, and inject our own knowledge. Still, we have to be careful when relying on specific information and values.