What is so amazing about IoT?

You can get started easily on a very small budget with microcontrollers, single-board computers and all kinds of electronics such as sensors. For the standard kits discussed here, plenty of online documentation, books and websites are available, so even people with little IT or electronics knowledge, or secondary-school students, can get hands-on experience with easy projects.

With a simple workbench, you can prototype and evaluate an idea before you even consider series production, or simply build a dedicated one-off device.

Microcontrollers and SBCs

ESP32

The ESP32 SoC (System on Chip) microcontroller by Espressif is the tool of choice when you aim for a small footprint in terms of size (the chip itself measures 7 x 7 mm), power consumption and price. It supports a range of peripherals: I2C, UART, SPI, I2S, PWM, CAN 2.0, ADC and DAC. Wi-Fi (802.11 b/g/n), Bluetooth 4.2 and BLE are built in.

The benefits come with limitations, though: the chip runs at up to 240 MHz, and its memory is measured in KiB (320 KiB RAM and 448 KiB ROM). Memory use has to be planned carefully, and the device should be switched conservatively between its active and sleep modes: it can consume as little as 2.5 µA in hibernation but can draw up to 800 mA when everything runs at full swing with Wi-Fi and Bluetooth enabled. The ESP32 and its variants teach you proper IoT design. You can buy the ESP32 as a NodeMCU development board for less than Euro 10,-.
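The following snippet shows why the sleep modes matter. It estimates the average current and battery life of a device that wakes briefly, for example to send a reading over Wi-Fi, and then hibernates. This is a minimal Python sketch of the time-weighted average. The 160 mA awake current, the 5 s / 595 s cycle and the 2000 mAh battery are hypothetical figures, not ESP32 measurements. Real batteries and voltage regulators also lose some capacity.

```python
# Rough battery-life estimate for a duty-cycled device (wake, send, sleep).
# All numbers used below are hypothetical, not measured ESP32 values.


def average_current_ma(active_ma: float, active_s: float,
                       sleep_ua: float, sleep_s: float) -> float:
    """Time-weighted average current in mA over one wake/sleep cycle."""
    sleep_ma = sleep_ua / 1000.0  # convert µA to mA
    period_s = active_s + sleep_s
    return (active_ma * active_s + sleep_ma * sleep_s) / period_s


def battery_life_days(capacity_mah: float, avg_ma: float) -> float:
    """Ideal runtime in days; ignores self-discharge and regulator losses."""
    return capacity_mah / avg_ma / 24.0


# Hypothetical cycle: 5 s awake at 160 mA (Wi-Fi on), 595 s hibernating at 2.5 µA
avg = average_current_ma(active_ma=160, active_s=5, sleep_ua=2.5, sleep_s=595)
print(f"average current: {avg:.3f} mA")
print(f"2000 mAh battery: about {battery_life_days(2000, avg):.0f} days")
```

With these assumed numbers, the 5 s awake phase accounts for 800 mA·s per 10-minute cycle. The entire sleep phase adds only about 1.5 mA·s. The average current is therefore dominated by how long the chip stays awake, so shortening the wake window usually buys more battery life than shaving the sleep current.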

Arduino

Arduino's history goes back to 2005, when it was initially released by the Interaction Design Institute Ivrea (Italy) as an electronics platform for students. It has been available as open-source hardware for over 15 years, so there is a huge user community, plenty of documentation and many projects ready to replicate.

The Arduino is somewhat similar to the ESP32 but less powerful, slower and with less memory. In return, it is more beginner-friendly. You write programs called sketches (in C/C++), which are compiled and uploaded to the board via USB; the IDE and the sketch concept are borrowed from Processing.

If your project involves capturing images, video or sound, the Arduino (and the ESP32) is not the right choice; choose the Raspberry Pi as the minimum platform.
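A quick calculation shows why. This Python sketch computes the size of a single uncompressed camera frame and compares it with the 320 KiB of ESP32 RAM quoted above. The resolutions and the 2-bytes-per-pixel RGB565 format are assumptions chosen for illustration. Compressed formats such as JPEG need less memory.

```python
# Back-of-the-envelope check: does one raw camera frame fit into RAM?
# Resolutions and pixel format are illustrative assumptions.

KIB = 1024
ESP32_RAM_KIB = 320  # RAM figure quoted for the ESP32


def frame_bytes(width: int, height: int, bytes_per_pixel: int) -> int:
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


resolutions = {"QVGA": (320, 240), "VGA": (640, 480), "HD": (1280, 720)}
for name, (w, h) in resolutions.items():
    size = frame_bytes(w, h, 2)  # RGB565: 2 bytes per pixel
    fits = size <= ESP32_RAM_KIB * KIB
    print(f"{name:5} {w}x{h}: {size / KIB:7.1f} KiB, fits in {ESP32_RAM_KIB} KiB: {fits}")
```

A raw VGA frame alone needs 600 KiB, nearly twice the available RAM, before any buffering, processing or networking. An ATmega328P-based Arduino Uno has only 2 KiB of SRAM.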

An Arduino costs between Euro 10,- and 50,-, depending on the manufacturer and specs. For educational purposes, you can find it bundled with sensors and shields for basic projects.

Raspberry Pi

The Raspberry Pi (introduced in 2012) is the tool of choice if you need a more powerful device. It runs a full operating system, can be connected to a screen, supports USB devices, and offers more memory, more CPU power and easy-to-code features. Connected to a screen (the Pi 4 has two micro-HDMI ports), it can serve as a simple desktop replacement for a regular user: surfing the web, watching movies and doing office work with LibreOffice.

The current Raspberry Pi 4 costs between Euro 50,- and 100,- (including case and power supply).

Edge or ML Devices

These devices are similar to the Raspberry Pi platform in terms of OS, connectivity, GPIOs etc., but lean more towards serious data processing such as ML inference at the edge.

NVIDIA Jetson

NVIDIA launched the embedded computing board in 2014 and has released several new versions since then. The current one is the Jetson Nano 2GB Developer Kit, which you can buy for less than Euro 70,-. With the free documentation, courses and tutorials, it is a small powerhouse that can run multiple neural networks in parallel. With the JetPack SDK it supports CUDA, cuDNN, TensorRT, DeepStream, OpenCV and more. How much cheaper can you make AI accessible on a local device? More info at NVIDIA.

Coral Dev Board

A single-board computer for high-speed ML inferencing. Google launched this local AI prototyping toolkit in 2019, and it costs less than Euro 150,-. More info at coral.ai.

Sensors

There is a myriad of sensors, add-ons, shields and breakouts for near-endless prototyping ideas. Here are a few common sensors to give a budget indication.

Note (1): Prices vary considerably between buying these sensors/shields locally (Germany) and from the source (China). Ordering from Chinese reseller shops can be significantly cheaper, though the goods may take weeks to arrive. Worse, you may have to spend time collecting them from the customs office.

Note (2): Check the specs of the sensors/shields you purchase for power consumption (including low-power or sleep modes) and accuracy.

| Sensor | Function |
|---|---|
| GY-68 BMP180 | Air pressure and temperature |
| SHT30 | Temperature and relative humidity |
| SDS011 | Dust sensor (PM2.5, PM10) |
| SCD30 | CO2 |
| GPS | Geo-positioning using GPS, GLONASS, Galileo |
| GY-271 | Compass |
| MPU-6050 | Gyroscope, acceleration |
| HC-SR04 | Ultrasonic sensor |
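Many of these sensors talk to the microcontroller over a simple serial protocol. As an illustration, this Python sketch decodes one measurement frame from the SDS011 dust sensor, which sends fixed 10-byte frames over UART. The frame layout follows our reading of the manufacturer's datasheet and should be checked against the datasheet for your module. `parse_sds011_frame` is our own hypothetical helper, not a library function. Reading the bytes from the serial port (e.g. with a library such as pySerial) is deliberately left out.

```python
# Decode one SDS011 measurement frame (10 bytes) received over UART.
# Assumed layout: AA C0 PM25_lo PM25_hi PM10_lo PM10_hi ID1 ID2 checksum AB


def parse_sds011_frame(frame: bytes) -> tuple:
    """Return (pm2_5, pm10) in µg/m³, or raise ValueError on a bad frame."""
    if len(frame) != 10:
        raise ValueError("SDS011 frame must be 10 bytes")
    if frame[0] != 0xAA or frame[1] != 0xC0 or frame[9] != 0xAB:
        raise ValueError("unexpected header, command or tail byte")
    # Checksum: low byte of the sum of the six data bytes (indices 2..7)
    if sum(frame[2:8]) & 0xFF != frame[8]:
        raise ValueError("checksum mismatch")
    # Values are little-endian 16-bit integers in tenths of µg/m³
    pm25 = ((frame[3] << 8) | frame[2]) / 10.0
    pm10 = ((frame[5] << 8) | frame[4]) / 10.0
    return pm25, pm10


# Example frame with a made-up device ID (0x12, 0x34)
sample = bytes([0xAA, 0xC0, 0x7B, 0x00, 0xD2, 0x00, 0x12, 0x34, 0x93, 0xAB])
print(parse_sds011_frame(sample))  # prints (12.3, 21.0)
```

Validating the header, tail and checksum before trusting the values matters on a serial line. A reader that starts mid-stream will otherwise produce plausible-looking but wrong readings.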

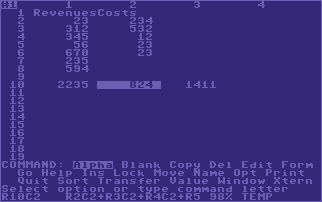

Some of the devices in the image above: Raspberry Pi 4B, Arduino (Mega, Nano), Orange Pi, Google Coral Dev Board, NVIDIA Jetson Nano, ESP32, plus a few sensors/add-ons such as Lidar, LoRaWAN, GPS, SCD30 (CO2), BMP180 (temperature, pressure), PMSA003I (dust particles PM2.5, PM10) and a microstepper motor shield.

What else do we need?

Innovative ideas, the curiosity to play and experiment, and the willingness to fail and succeed with all kinds of projects.

A 3D printer comes in handy to print casings or other mechanical parts.

Next Steps

The step from prototyping in the lab to mass-producing an actual device is huge, though possible with the right funding. Hand-building one or a few devices that you fully control is very different from manufacturing, shipping and supporting tens of thousands of devices as a product. You have to obtain all kinds of certifications (e.g. CE marking for Europe). You also need to plan for the device to be designed for, and produced by, a third party (an EMS, electronics manufacturing services provider).

Another aspect is deploying IoT devices at scale. A device operating in a closed environment, e.g. a consumer appliance that communicates only locally, does not require a server backend. Devices deployed at large are a different matter, e.g. a fleet management system or a mix of different device types. For these, it is advisable to use one of the IoT platforms, in the cloud or on premises (AWS, Microsoft, Particle, IBM, Oracle, OpenRemote and others).
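In a fleet, every message the backend receives has to identify which device sent it and when. This Python sketch builds such a telemetry message. The field names, the topic layout and both helper functions are hypothetical. AWS, Microsoft, Particle, OpenRemote and the other platforms each define their own message formats and SDKs.

```python
# Hypothetical telemetry format for a device in a fleet; field names and
# topic layout are illustrative assumptions, not any platform's real schema.
import json
import time
from typing import Optional


def telemetry_topic(fleet: str, device_id: str) -> str:
    """Hypothetical per-device topic so the backend can route messages."""
    return f"{fleet}/{device_id}/telemetry"


def telemetry_payload(device_id: str, readings: dict,
                      ts: Optional[float] = None) -> str:
    """Serialize readings plus the metadata needed to tell devices apart."""
    message = {
        "device_id": device_id,  # unique within the fleet
        "timestamp": int(time.time() if ts is None else ts),  # Unix seconds
        "readings": readings,  # e.g. {"pm2_5": 12.3, "pm10": 21.0}
    }
    # Compact separators keep the message small on constrained links
    return json.dumps(message, separators=(",", ":"), sort_keys=True)


print(telemetry_topic("fleet-a", "esp32-0042"))
print(telemetry_payload("esp32-0042", {"pm2_5": 12.3, "pm10": 21.0}))
```

Putting the device ID in both the topic and the body is a design choice, not a requirement. The topic lets the backend route messages, while the body stays self-describing when it is stored or forwarded.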

Stay tuned...